Artificial intelligence (AI) is reshaping addiction therapy by improving relapse detection, personalizing treatment, and expanding access through apps and virtual tools. While it offers clear advantages, it also poses challenges such as privacy risks, over-reliance on technology, and reduced human connection, raising ethical concerns about autonomy, fairness, and accountability. Effective use requires self-management, in which individuals protect their data, balance digital and offline coping, and maintain control over their choices. Families can help by encouraging healthy use, respecting privacy, and integrating AI insights into supportive conversations. Communities, through clinics, schools, and recovery groups, can strengthen outcomes by making AI tools accessible, equitable, and culturally sensitive. Used wisely, AI can enhance recovery while keeping empathy and human connection at the center of care.

How AI Is Transforming Addiction Recovery Care

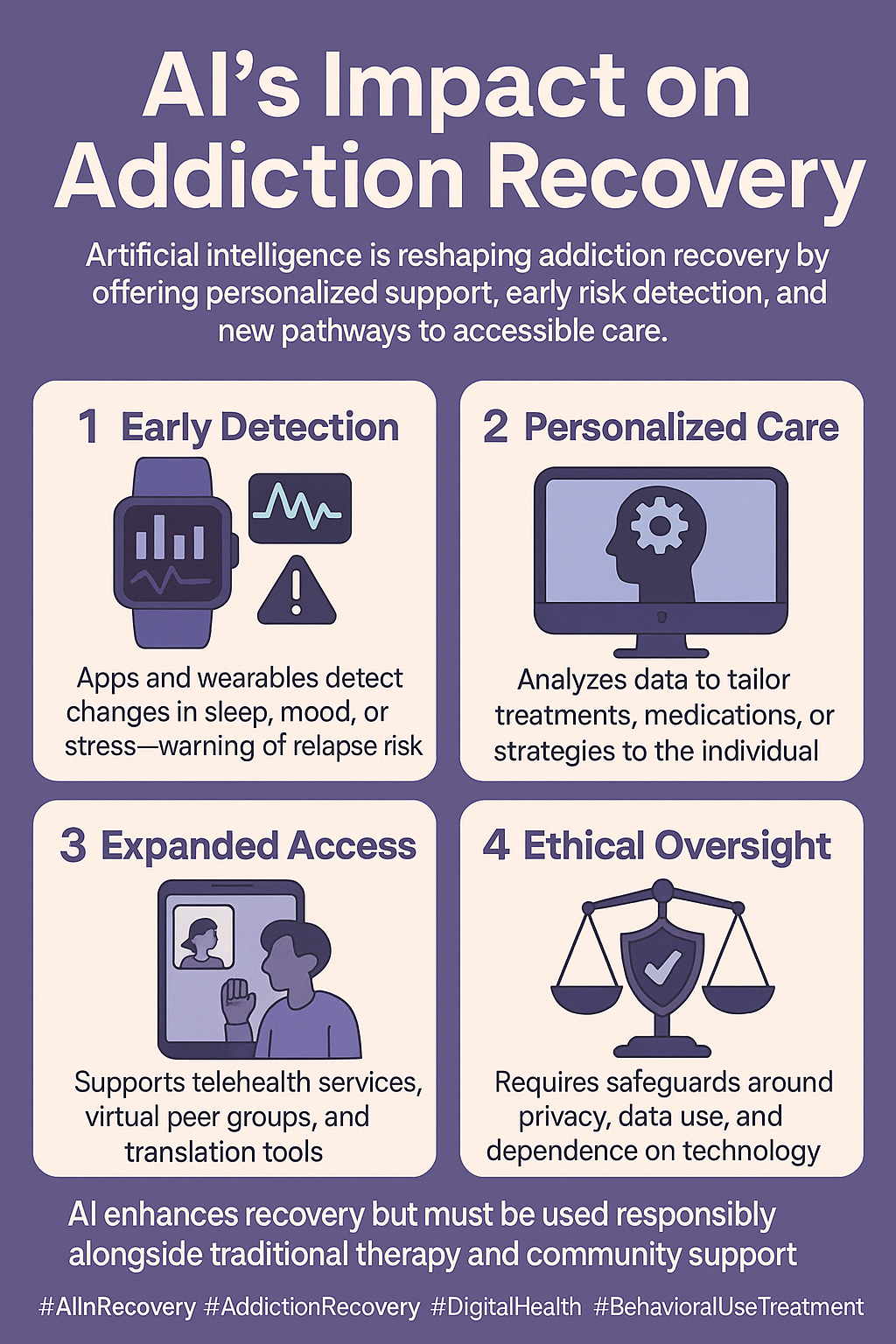

Artificial intelligence is becoming a powerful ally in addiction recovery care, offering new tools that complement traditional treatment and strengthen long-term support. Instead of replacing human connection, AI enhances it—providing early detection, personalized interventions, and expanded access to people who need recovery help the most. Here’s how AI is reshaping the landscape of addiction treatment.

🧠 Early Detection and Screening

AI-powered apps and wearable devices can detect early signs of relapse risk by monitoring mood, sleep patterns, heart rate, and stress levels. Machine learning models analyze these changes and flag when someone may be vulnerable, giving clinicians and individuals the chance to intervene early—before relapse occurs.
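As a rough illustration of the flagging step described above, the sketch below compares one day's readings against a person's own recent baseline and flags any signal that drifts more than a chosen number of standard deviations. The signal names, the sample numbers, and the 2.0 threshold are illustrative assumptions, not values from any real product or clinical guideline, and real systems are likely to use far richer models.

```python
from statistics import mean, stdev

def flag_deviations(baseline, today, threshold=2.0):
    """Return the signals whose reading today is far from the personal baseline.

    baseline: dict mapping signal name -> list of past daily readings
    today: dict mapping signal name -> today's reading
    threshold: how many standard deviations counts as unusual (assumed value)
    """
    flagged = []
    for signal, history in baseline.items():
        if signal not in today or len(history) < 2:
            continue  # not enough data to judge this signal
        spread = stdev(history)
        if spread == 0:
            continue  # no variation in the history, so a z-score is undefined
        z = (today[signal] - mean(history)) / spread
        if abs(z) > threshold:
            flagged.append(signal)
    return flagged

# Hypothetical week of readings for one person
baseline = {
    "sleep_hours": [7.0, 7.5, 6.5, 7.0, 7.5, 7.0, 6.5],
    "resting_heart_rate": [62, 64, 63, 61, 65, 63, 62],
}
today = {"sleep_hours": 4.0, "resting_heart_rate": 64}

print(flag_deviations(baseline, today))  # prints ['sleep_hours']
```

Comparing each person against their own history, rather than a population average, is one simple way to keep an alert personal. A flag like this is only a prompt for a human check-in, not a diagnosis.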

📱 Personalized Support Tools

AI-driven chatbots and virtual assistants offer 24/7 support, providing coping strategies, medication reminders, and motivational check-ins. These tools adjust to an individual’s progress, cravings, or stressors, making recovery guidance more timely and personalized.

🧾 Treatment Personalization

AI can sift through large amounts of medical, behavioral, and even genetic data to identify which treatments—medications, therapies, or support groups—are most likely to be effective for a specific patient. This targeted approach reduces the trial-and-error process and increases the likelihood of successful outcomes.

🏥 Clinical Support for Providers

Clinicians benefit from AI as well. Predictive models help identify patients who are at higher risk of relapse or who may need more intensive support. AI tools also streamline documentation and analyze patient data, allowing providers to focus more on direct patient care and less on administrative tasks.

🌐 Expanding Access to Care

AI-powered apps, telehealth platforms, and virtual peer-support groups help remove barriers related to cost, geography, or stigma. Language translation tools also help non-English-speaking individuals access recovery services, making care more inclusive and widely available.

🔄 Relapse Prevention and Long-Term Monitoring

Continuous AI monitoring creates real-time feedback loops that alert individuals and providers when risk factors rise. Wearable devices paired with AI can encourage healthier habits—like suggesting breathing exercises or movement when stress spikes—supporting long-term recovery.

⚖️ Ethical Considerations

With great potential comes responsibility. AI in addiction recovery raises important issues around privacy, consent, and data safety. Protecting sensitive health information and ensuring that AI tools remain unbiased and inclusive are both essential for ethical implementation.

✅ Summary

AI is transforming addiction recovery by enhancing early detection, personalizing treatment, supporting clinicians, and expanding access to care. While it can’t replace the value of human support and therapeutic relationships, it strengthens them—creating a more proactive, responsive, and accessible system for individuals seeking long-term recovery.

The Hidden Risks of Using AI in Addiction Recovery Care

AI is becoming a growing part of addiction therapy, offering innovative tools that support screening, monitoring, and personalized treatment. But while the technology brings promise, it also introduces real challenges, limitations, and risks—especially when working with vulnerable populations. Understanding these concerns is essential to ensure AI is used safely and ethically in recovery care.

🔒 Privacy and Data Security

AI tools often collect highly sensitive information such as mood patterns, relapse indicators, biometrics, and behavioral data. If these systems are not securely designed, patient information could be exposed, shared, or misused. Teens and other vulnerable groups may not fully understand how their data is stored or who can access it, increasing the risk of unintended privacy violations.

⚖️ Bias and Equity Concerns

Because AI learns from existing data, it can also inherit biases hidden within that data. This may lead to inaccurate predictions or unequal treatment recommendations for individuals from marginalized communities. For example, if an algorithm is trained mostly on urban patient data, it may work poorly for rural populations—misclassifying risk or offering less practical guidance.
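One common way to surface the kind of gap described above is to measure a model's accuracy separately for each group instead of reporting a single overall number. The sketch below does this with made-up validation records; the group labels and results are hypothetical and exist only to show the calculation.

```python
from collections import defaultdict

def accuracy_by_group(records):
    """Compute prediction accuracy separately for each group.

    records: iterable of (group, predicted_label, actual_label) tuples
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        if predicted == actual:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}

# Hypothetical validation results: (group, model said high risk?, truly high risk?)
records = [
    ("urban", True, True), ("urban", False, False),
    ("urban", True, True), ("urban", False, False),
    ("rural", False, True), ("rural", False, False),
    ("rural", True, True), ("rural", False, True),
]

for group, acc in accuracy_by_group(records).items():
    print(f"{group}: {acc:.0%} accurate")
```

In this invented data the overall accuracy is 75%, which hides the fact that the model is right every time for urban patients and only half the time for rural ones.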

🤖 Lack of Human Connection

Addiction recovery relies heavily on empathy, trust, and meaningful therapeutic relationships. Over-reliance on AI—such as automated check-ins or chatbots—can make care feel cold or impersonal. While AI can offer quick support, it cannot replicate the emotional connection and nuanced understanding provided by human counselors, peers, or clinicians.

🧠 Over-Reliance on Technology

AI-based recovery tools are helpful, but depending on them too much can create new vulnerabilities. If someone relies on apps for coping strategies, reminders, or motivation, they may feel lost during tech failures, app outages, or device issues. This dependency can leave individuals feeling unsupported at critical moments.

🏥 Clinical Limitations

AI systems can struggle to interpret complex emotional experiences or distinguish between similar symptoms. For instance, an algorithm may misread stress-related insomnia as withdrawal insomnia, triggering the wrong intervention. False positives may cause unnecessary alarm, while false negatives could overlook early signs of relapse.
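The trade-off between false positives and false negatives can be made concrete by counting each outcome. The following is a minimal sketch with invented alert results; the function name and the data are assumptions for illustration only.

```python
def screening_errors(predicted, actual):
    """Count the four possible outcomes of a yes/no relapse-risk alert.

    predicted, actual: equal-length lists of booleans
    (True = flagged as at risk / truly at risk).
    """
    counts = {"true_pos": 0, "false_pos": 0, "true_neg": 0, "false_neg": 0}
    for p, a in zip(predicted, actual):
        if p and a:
            counts["true_pos"] += 1
        elif p and not a:
            counts["false_pos"] += 1   # unnecessary alarm
        elif not p and a:
            counts["false_neg"] += 1   # missed warning sign
        else:
            counts["true_neg"] += 1
    return counts

# Hypothetical alerts for six people versus what actually happened
predicted = [True, True, False, False, True, False]
actual = [True, False, False, True, True, False]

counts = screening_errors(predicted, actual)
# Sensitivity: share of truly at-risk people the alert caught
sensitivity = counts["true_pos"] / (counts["true_pos"] + counts["false_neg"])
print(counts, round(sensitivity, 2))
```

Making an alert more sensitive usually raises the false-alarm count, so the acceptable balance is a clinical judgment rather than a purely technical setting.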

💰 Access and Cost Barriers

Not everyone has access to smartphones, wearable devices, or reliable internet. These digital gaps are especially prominent in rural communities, low-income families, and at-risk youth. When AI tools become standard in recovery care, those without access could fall further behind, deepening existing health inequities.

⚠️ Ethical and Legal Issues

AI raises unresolved questions:

- Who owns the patient’s data?

- Should AI predictions influence medical decisions?

- Who is responsible if an AI system makes a harmful mistake?

Without clear regulations, addiction recovery programs risk misusing technology or relying on tools that haven’t been adequately validated.

✅ Summary

AI can enhance addiction therapy, but it also brings significant disadvantages—privacy risks, bias concerns, loss of human connection, over-dependence, clinical limitations, access inequalities, and unresolved ethical issues. As AI continues to evolve, it must be used carefully, transparently, and alongside—not instead of—human support to ensure safe and equitable recovery care.

The Ethical Dilemmas of Using AI in Addiction Therapy

As artificial intelligence becomes more common in addiction treatment, it brings powerful possibilities—but also complex ethical dilemmas. Because AI tools work with vulnerable populations, sensitive health data, and life-or-death situations, every decision about how the technology is used carries weight. Understanding these dilemmas is essential for creating AI systems that support recovery without compromising human dignity, privacy, or fairness.

🔒 1. Privacy vs. Monitoring

AI tools can track mood, cravings, sleep, GPS location, or even social media activity to detect relapse risk. While this monitoring can save lives, it also raises significant privacy concerns.

The ethical tension: Should systems prioritize constant surveillance for safety, or protect personal privacy even if it increases the risk of relapse? Finding balance means being transparent about data use and ensuring patients fully understand what is being monitored and why.

⚖️ 2. Autonomy vs. Algorithmic Influence

AI-driven recommendations can nudge individuals toward specific treatments, medication options, or behavioral choices. This guidance can be helpful—but it risks undermining patient autonomy if the technology becomes too directive.

The ethical tension: How do we provide supportive recommendations without pressuring individuals or limiting their right to make their own choices, even when those choices carry risk?

🤖 3. Human Connection vs. Automation

AI chatbots and virtual assistants offer immediate, around-the-clock support, but they lack empathy, cultural understanding, and emotional nuance. Over-reliance on automated tools may reduce human interaction, which is essential in recovery.

The ethical tension: Should AI only supplement human care, or can it ethically replace some interactions in resource-limited settings? The risk is creating a system where vulnerable people receive “robotic care” instead of genuine human support.

🌍 4. Equity vs. Bias

AI learns from existing data, which may not represent all populations equally. This can produce biased predictions that disadvantage certain groups, such as rural communities, teens, or people of color.

The ethical tension: Is it moral to use AI tools that work better for some groups than others? Deploying AI without correcting these inequities risks worsening existing disparities in addiction care.

🏥 5. Responsibility and Liability

If an AI system misclassifies a patient, fails to detect relapse risk, or provides a harmful recommendation, who is accountable? Clinicians, developers, and health systems all play a role—but current regulations are unclear.

The ethical tension: Without clear accountability guidelines, mistakes can lead to legal and moral gray areas in high-risk scenarios.

💰 6. Access vs. Inequality

AI-enhanced treatment often requires modern smartphones, wearables, and internet access. This means wealthier or urban patients may benefit more, while low-income or rural individuals are left behind.

The ethical tension: Should healthcare systems subsidize access to AI tools, or would that divert resources from traditional therapies that are already proven to work?

✅ Summary

The ethical dilemmas surrounding AI in addiction therapy focus on privacy, autonomy, empathy, fairness, accountability, and access. While AI has the potential to strengthen recovery care, it must be implemented with caution and compassion. The goal is to harness technology without losing the human-centered, culturally sensitive, and equitable foundation that effective addiction treatment depends on.

Self-Management Strategies for Using AI Safely in Addiction Recovery

AI tools can play a meaningful role in addiction therapy—from tracking cravings to offering coping strategies—but they work best when individuals maintain control, set limits, and use the technology intentionally. Strong self-management ensures AI remains supportive, ethical, and balanced rather than overwhelming or intrusive. Here are key strategies to help individuals use AI wisely throughout their recovery journey.

🧭 1. Maintain Active Control and Autonomy

AI can guide recovery, but it should never take over the decision-making process.

- Set your own recovery goals and use AI apps or trackers only as helpers.

- Question AI recommendations, remembering that algorithms interpret patterns—not human nuance.

- Stay in the driver’s seat by using digital tools for reminders, journaling, or coping strategies while keeping final choices between you, your therapist, and your support team.

Maintaining autonomy ensures your recovery stays personal, grounded, and aligned with your values—not automated by a machine.

🔒 2. Protect Privacy and Data Security

Because AI tools often collect sensitive emotional and health data, privacy needs to be a top priority.

- Review app permissions and only share what’s truly necessary.

- Use secure devices with passwords or encryption to protect recovery information.

- Check the privacy policies and ensure the platform complies with HIPAA or similar standards before entering personal details.

Taking these steps prevents misuse of data and helps you feel more secure using AI in vulnerable moments.

📊 3. Track and Reflect Regularly

AI insights are most useful when paired with thoughtful reflection.

- Monitor progress using AI dashboards that track cravings, triggers, sleep, or mood.

- Reflect offline by combining digital insights with journaling or therapy discussions.

- Recognize patterns in how you engage with the tool—when it’s helpful, and when it starts to feel intrusive or overly controlling.

Reflection ensures that AI supports your awareness rather than replacing it.

🌱 4. Balance Tech with Human Support

AI should enhance—not replace—the human relationships that are central to recovery.

- Blend AI with therapy, counseling, or peer-support groups to keep emotional connection strong.

- Set boundaries by scheduling screen-free recovery activities, such as exercise, hobbies, or time with loved ones.

- Engage your community by sharing AI insights with sponsors, accountability partners, or mentors.

Balancing tech with human care keeps recovery relational, empathetic, and community-based.

⚖️ 5. Prevent Over-Reliance on AI

While helpful, AI should not become your only coping tool.

- Diversify strategies such as mindfulness, grounding, or journaling without digital assistance.

- Prepare a backup plan for moments when technology fails, like coping exercises that don’t require apps or the internet.

- Build resilience so that the skills practiced with AI gradually become independent habits.

Avoiding over-reliance ensures long-term strength and stability in recovery.

✅ Summary

Using AI in addiction therapy effectively means staying autonomous in decision-making, protecting your privacy, reflecting on insights, balancing digital tools with human support, and avoiding dependence on technology. When managed wisely, AI can enhance recovery by providing structure, awareness, and real-time support—while you remain entirely in charge of your journey.

Family Support Strategies for Using AI Safely in Addiction Therapy

As AI becomes more common in addiction therapy, families play a vital role in making sure the technology is used ethically, effectively, and in harmony with human connection. While AI can offer structure, insights, and reminders, recovery still depends on trust, empathy, and supportive relationships. When families understand how to guide the use of AI tools, they can strengthen recovery rather than complicate it. Here are powerful ways families can provide balanced, informed support.

👨‍👩‍👧 1. Encourage Healthy Use of AI Tools

Families can help individuals view AI as a supplement to recovery—not the main driver.

- Reinforce that AI is a supportive aid, not a decision-maker.

- Encourage consistent journaling, cravings tracking, or medication reminders through apps.

- Check in gently about AI insights—without judgment or pressure.

These habits help the person engage with technology in a steady and empowering way.

🔒 2. Promote Privacy and Trust

Respect and transparency are essential when AI collects sensitive emotional and health information.

- Let the individual decide how much AI-generated data they want to share.

- Learn privacy settings together to ensure data is stored safely.

- Avoid using AI insights to criticize or control the person.

Protecting privacy strengthens trust and ensures AI doesn’t harm the therapeutic relationship.

🧠 3. Integrate AI Insights into Family Conversations

AI-generated reports can be valuable conversation starters when used gently.

- Use mood or sleep data to open supportive dialogue—not as “proof” of problems.

- Ask open questions like, “Your app showed high stress—what helped you this week?”

- Use insights to reinforce progress, coping skills, and communication.

This keeps AI data constructive and supportive rather than punitive.

⚖️ 4. Balance Human Connection with Tech Support

AI can’t replace empathy, love, and authentic connection.

- Remind the individual that AI is a tool—not a source of emotional support.

- Encourage ongoing therapy, peer groups, or counseling alongside technology.

- Share screen-free activities such as meals, walks, or mindfulness exercises.

Balancing tech with family connection strengthens emotional resilience.

📚 5. Learn and Educate Together

Understanding AI reduces fear and helps families use it responsibly.

- Attend workshops or webinars on AI in mental health and addiction care.

- Explore how apps work so everyone feels informed and empowered.

- Discuss what feels helpful and what feels intrusive.

Shared learning promotes confidence and reduces uncertainty.

🚦 6. Support Boundaries and Prevent Over-Reliance

Healthy boundaries prevent AI from becoming overwhelming or intrusive.

- Help monitor notification settings or screen time so AI doesn’t dominate daily life.

- Encourage offline coping strategies—like hobbies, breathing exercises, or journaling without devices.

- Reinforce the idea that recovery skills should eventually work independently of technology.

This helps build long-term resilience and reduces digital dependence.

✅ Summary

Family support strategies for AI-assisted addiction therapy include:

- Encouraging balanced, consistent use of digital tools

- Protecting privacy and establishing trust

- Using AI insights to foster conversation, not criticism

- Keeping human connection at the center of recovery

- Learning about AI together to strengthen confidence

- Supporting boundaries and offline coping skills

When families actively and thoughtfully participate, AI becomes a helpful addition—enhancing recovery without replacing human care, empathy, or connection.

Community Resource Strategies for Using AI Effectively in Addiction Therapy

AI can strengthen addiction recovery, but its real impact is felt when it becomes part of a larger community support system rather than just an individual tool. When clinics, schools, peer groups, and local organizations integrate AI responsibly, they create a network of accessible, equitable, and human-centered recovery support. These community resource strategies help ensure AI enhances care for everyone, especially those who might otherwise be left behind.

🏥 1. Community Health Clinics and Recovery Centers

Community clinics are well-positioned to introduce AI tools safely and effectively.

- Integration with AI tools: Clinics can use AI-powered apps for screening, relapse prediction, and symptom tracking while securely sharing data with care teams.

- On-site training: Staff can teach individuals how to interpret AI insights without confusion or fear.

- Accessibility programs: Clinics and local partners can subsidize devices, data plans, or internet access so low-income individuals aren’t excluded from AI-supported recovery.

This ensures that AI helps expand care—not deepen disparities.

📱 2. Peer Support and Recovery Groups

Peer-based settings can enrich their community support with AI insights.

- AI-assisted groups: Meetings can incorporate app-tracked trends—like mood or cravings—as conversation starters.

- Virtual peer communities: AI-enhanced platforms offer 24/7 connection for those without local groups or transportation.

- Accountability networks: With consent, AI can send progress updates to sponsors, mentors, or peers, strengthening accountability and motivation.

These integrations help keep recovery connected, even between meetings.

🎓 3. Education and Prevention Programs

Schools and community organizations can use AI to support early intervention and digital literacy.

- Youth screening tools: AI helps identify at-risk teens early while keeping personal data private.

- Community workshops: Libraries, nonprofits, and faith-based groups can teach families how to blend AI with traditional recovery strategies.

- Digital literacy training: Helps individuals and families understand privacy, data bias, and safe AI use.

Education ensures people use AI intentionally, safely, and confidently.

⚖️ 4. Policy and Advocacy

Community leaders and advocacy groups play a key role in shaping ethical and equitable AI systems.

- Local government support: Funding AI-based apps, telehealth, and digital recovery platforms in underserved areas.

- Advocacy initiatives: Pushing for bias-free design and equitable access across cultural, linguistic, and socioeconomic groups.

- Ethical oversight boards: Community-led committees can review how local programs use AI to ensure transparency, privacy, and ethical practice.

Strong advocacy helps prevent misuse and protects vulnerable populations.

🤝 5. Faith-Based and Cultural Organizations

These organizations often serve as trusted community anchors.

- Culturally sensitive AI adaptation: Tools can be tailored with language translation and coping strategies aligned with cultural norms.

- Stigma reduction: Faith-based and cultural groups can normalize responsible AI use as a complement—not a replacement—for spiritual, emotional, and human support.

This makes recovery more inclusive and culturally relevant.

✅ Summary

Community resource strategies ensure AI in addiction therapy is:

- Accessible through clinics, schools, and nonprofits

- Connected with peer groups, mentors, and accountability networks

- Understood through digital literacy training and workshops

- Equitable by reducing digital divides and advocating for fair design

- Ethical through oversight and privacy protections

When embedded in community networks, AI shifts from being just an app to becoming part of a shared recovery safety net—one that strengthens support, expands access, and empowers individuals on their healing journey.

Frequently Asked Questions

Here are some common questions:

Question: What are examples of AI use in recovery?

Answer: Here are examples of how AI is being used in addiction recovery today, grouped by category:

🔍 1. Early Detection & Relapse Prediction

AI can identify warning signs before a relapse happens by analyzing patterns in behavior, mood, and health.

Examples:

- Wearables (Fitbit, Apple Watch) detect changes in heart rate, sleep, or stress linked to cravings.

- AI relapse prediction models that alert clinicians when a patient’s risk rises.

- Mood-tracking apps that use AI to notice patterns like isolation, insomnia, or heightened anxiety.

📱 2. AI-Powered Recovery Apps

Many recovery apps now include AI features to support daily sobriety.

Examples:

- reSET and reSET-O – FDA-authorized prescription digital therapeutics that deliver structured, CBT-based modules to reinforce relapse-prevention skills.

- Sober Grid AI Peer Support – an AI chatbot offering emotional check-ins and coping strategies.

- Woebot – an AI mental health chatbot that helps users manage stress and cravings with CBT-based tools.

🧠 3. Personalized Treatment Plans

AI analyzes data from thousands of patients to predict the most effective treatment for a specific individual.

Examples:

- Algorithms that match patients with the most suitable medication (e.g., buprenorphine vs. naltrexone).

- Models predicting which therapy—CBT, contingency management, group therapy—will work best.

- Tailored recommendations based on genetics, health records, and behavior patterns.

🏥 4. Clinical Decision Support

AI assists clinicians by analyzing patient data and providing insights.

Examples:

- Electronic health record (EHR) alerts that flag high-risk patients.

- Tools that predict overdose risk using hospital and EMS data.

- AI systems that summarize patient progress and suggest treatment adjustments.

🌐 5. Virtual Peer Support & Online Communities

AI helps keep people connected and supported around the clock.

Examples:

- AI-moderated online recovery groups that remove harmful content and provide safe discussion spaces.

- 24/7 AI chatbots for those who can’t attend in-person meetings.

- Smart matching tools that connect individuals with peers going through similar experiences.

🎤 6. Therapy Enhancement & Skill Building

AI complements (not replaces) therapy by offering tools to practice recovery skills.

Examples:

- CBT-based AI chatbots that help individuals manage negative thinking in real time.

- AI-guided mindfulness sessions that adapt to stress levels.

- Speech analysis tools that detect emotional distress during telehealth calls.

🔒 7. Harm Reduction Tools

AI can help prevent overdoses and improve safety.

Examples:

- AI systems that monitor drug-checking results to detect trends in fentanyl contamination.

- Machine learning models predicting overdose hotspots for EMS and public health.

- Smart syringes that track injection patterns to identify dangerous use.

🚀 8. Reintegration & Daily Life Support

AI can help individuals maintain stability as they rebuild their lives.

Examples:

- AI reminders for medications, appointments, or recovery meetings.

- Job-matching tools that suggest sober-friendly workplaces.

- Budgeting or time-management apps that help structure daily routines.

Question: What are examples of AI that is not compatible with recovery?

Answer: Some AI is not compatible with addiction recovery because it harms emotional safety, increases relapse risk, or conflicts with therapeutic goals. Examples include:

🚫 Examples of AI Not Compatible With Recovery

1. AI That Encourages Addictive Behaviors

Some AI systems are designed to maximize screen time or engagement, which can trigger cravings, stress, or compulsive use.

Examples:

- Algorithmic social media feeds that push triggering content (parties, substance use, nightlife).

- AI-recommended videos on YouTube/TikTok that show drug use, drinking, or glamorized partying.

- Gaming algorithms that promote binge-play behavior.

Why it’s harmful:

These systems reinforce impulsivity, escapism, and isolation—all common relapse triggers.

2. AI That Uses Manipulative or Predatory Design

Some AI-driven tools manipulate emotions to keep users engaged.

Examples:

- Chatbots designed for emotional dependency (simulated romance/companionship).

- AI “friend” or “partner” apps that blur emotional boundaries.

- Gambling or casino apps with AI-driven reward loops.

Why it’s harmful:

Recovery requires real human connection and stable emotional boundaries—not AI-driven attachment or dopamine traps.

3. AI That Collects Excessive or Unsafe Personal Data

Apps that gather large amounts of sensitive information without transparency can harm emotional safety and privacy.

Examples:

- AI mental health apps with unclear data-sharing policies.

- AI that uses GPS tracking without consent.

- Tools that sell personal behavioral data to advertisers.

Why it’s harmful:

Loss of privacy increases shame, anxiety, and mistrust—dangerous for people in early recovery.

4. AI That Gives Incorrect or Harmful Mental Health Advice

Unregulated AI tools may provide misleading, dismissive, or unsafe advice.

Examples:

- AI chatbots that give generic or inaccurate crisis responses.

- Tools that misidentify withdrawal symptoms, mood states, or medical risks.

- AI that suggests coping strategies that conflict with clinical advice.

Why it’s harmful:

People in recovery need accurate guidance—incorrect advice can increase relapse risk or delay urgent care.

5. AI Not Designed for Vulnerable Populations

Some tools are too complex, triggering, or overstimulating for individuals with addiction or trauma histories.

Examples:

- Highly gamified mental health apps that trigger compulsive behavior.

- Overly technical dashboards that cause overwhelm or frustration.

- AI that sends frequent alerts, increasing anxiety.

Why it’s harmful:

Recovery tools must be simple, calming, and supportive—not stressful.

6. AI That Replaces, Instead of Supports, Human Connection

AI systems meant to replace therapy, mentorship, or peer support are NOT compatible with recovery.

Examples:

- AI-only “therapy bots” that replace real counseling.

- AI alternatives to in-person or virtual support groups.

- Apps that discourage sharing with family or clinicians.

Why it’s harmful:

Recovery thrives on empathy—AI cannot replicate lived experience or relational healing.

7. AI With Strong Bias or Cultural Mismatch

Biased algorithms can misjudge risk or offer inappropriate guidance.

Examples:

- AI that mislabels people in rural areas as “low risk” due to limited data.

- AI that doesn’t understand cultural communication styles.

- Tools that only work well for specific demographic groups.

Why it’s harmful:

Biased AI undermines trust and leads to improper or unsafe recommendations.

Summary: AI NOT Compatible With Recovery

❌ AI that triggers addictive behavior

❌ Manipulative, dopamine-based engagement algorithms

❌ Unsafe or invasive data-collecting apps

❌ AI that gives inaccurate or harmful advice

❌ Overstimulating or confusing technology

❌ Tools replacing human connection

❌ Biased or non-inclusive AI models

Question: How should AI be used with therapy or peer support groups?

Answer: AI can be a powerful addition to therapy and peer support—but it should never replace the human connection that recovery depends on. Here’s how to use AI safely, ethically, and effectively alongside existing support structures:

1. Use AI as a Supplemental Tool—Not a Replacement

AI should enhance recovery, not replace counselors, sponsors, or group meetings.

How this looks in practice:

- AI app tracks cravings → you discuss the pattern in therapy.

- Chatbot offers coping techniques → you share what worked with the group.

- AI flags stress or sleep issues → your therapist explores the root causes.

AI provides data and reminders; humans offer empathy, insight, and accountability.

2. Bring AI-Generated Insights Into the Session

AI tools can track mood, triggers, stress, or sleep patterns. These insights can strengthen therapy discussions.

Examples:

- “My app showed three high-stress days—can we explore what triggered them?”

- “My cravings spiked at night this week—what strategies can I use?”

- “The app flagged low sleep—how does that affect my recovery?”

This turns raw data into a meaningful reflection.

3. Use AI to Improve Accountability in Peer Groups

Peer support thrives on honesty and routine. AI can help reinforce those habits.

Examples:

- Sharing weekly AI summaries during check-ins.

- Using mood trackers as conversation starters.

- Allowing AI to send optional updates to a sponsor (with consent).

The goal: more connection, not surveillance.
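To show what "with consent" could mean in software terms, here is a small sketch in which an update is only ever sent to people the individual has explicitly approved, and approval can be withdrawn at any time. The class, function, and recipient names are hypothetical and do not describe any particular app.

```python
from dataclasses import dataclass, field

@dataclass
class SharingPreferences:
    """Who the person has agreed to share summaries with (hypothetical model)."""
    consented_recipients: set = field(default_factory=set)

    def grant(self, recipient):
        self.consented_recipients.add(recipient)

    def revoke(self, recipient):
        self.consented_recipients.discard(recipient)

def recipients_for_update(prefs, requested):
    """Keep only the requested recipients the person has explicitly consented to."""
    return [r for r in requested if r in prefs.consented_recipients]

prefs = SharingPreferences()
prefs.grant("sponsor")
first = recipients_for_update(prefs, ["sponsor", "family"])
print(first)   # prints ['sponsor']; family never consented

prefs.revoke("sponsor")
second = recipients_for_update(prefs, ["sponsor", "family"])
print(second)  # prints []; consent was withdrawn
```

The design choice here is that sharing is off by default: nothing goes out unless the person has opted in for that specific recipient.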

4. Use AI Between Sessions for Skill Practice

Recovery often requires practicing coping skills outside of therapy.

AI can help with:

- CBT-style thought reframing

- Guided breathing and mindfulness

- Stress or craving management prompts

- Meeting reminders or daily check-ins

This fills the gaps between human support sessions.

5. Keep Emotional Support Human-Centered

AI can misunderstand emotions—and cannot replace empathy.

Avoid:

- Using AI as your primary crisis resource

- Allowing AI chats to take the place of a sponsor contact

- Leaning on AI for deep emotional disclosures

Do:

- Use AI for structure and tools

- Use therapists and peers for emotional connection

6. Set Clear Boundaries Around AI Use

Healthy boundaries prevent over-reliance.

Examples:

- Use AI during specific times (morning reflections, evening check-ins).

- Turn off constant notifications.

- Share only what you’re comfortable sharing.

AI should never feel intrusive or controlling.

7. Ensure Everyone Understands the Tool

For therapy or groups using AI collectively:

- The therapist should know how the tool works.

- The group should discuss privacy and sharing boundaries.

- Everyone should consent before incorporating AI data.

This maintains trust and transparency.

Summary

AI should be used with therapy and peer groups by:

- Supporting—not replacing—human care

- Bringing insight and structure to discussions

- Providing coping tools between sessions

- Improving accountability (with consent)

- Maintaining strong boundaries

- Keeping emotional healing centered on people

When used intentionally, AI becomes a helpful companion—while therapy and community remain the heart of recovery.

Conclusion

AI offers powerful opportunities to improve addiction therapy by detecting relapse risks early, personalizing care, and expanding access to support. Yet, its disadvantages—such as privacy concerns, over-reliance on technology, and potential loss of human connection—require careful attention. Ethical dilemmas around autonomy, fairness, and accountability further highlight the need for responsible use. Success depends on a shared effort: individuals practicing self-management to stay in control, families providing supportive oversight while respecting privacy, and communities ensuring access, equity, and cultural sensitivity. When balanced with empathy and human connection, AI can serve as a valuable complement to traditional therapy, enhancing recovery while safeguarding dignity and trust.
